How we spent 500 hours writing tests and proved their business value

A project owner who doesn’t see any business value in adding automated tests is a problem that many projects and software developers face. I believe we all agree that developers should naturally include unit tests in their task estimates, which may not even be shown to the customer. But this might not be the case for more time-consuming test types, like integration tests or end-to-end tests. Sometimes a lot of data preparation is required before such a test can be implemented. Your end-to-end test may depend on many external services, all of which must be synchronized during its execution. This can be a real struggle: implementing the test can take dozens of hours, and in the end it might not even work because one of the external systems cannot be automated. The purpose of this article is to show how proving the value of such tests can allow you to implement more and more integration and end-to-end tests. This is what, after all, can make your project owner happy.

Plan

First, you need to know what you would like to test. Probably the worst thing you can do is pick the most difficult part of your system and start automating tests for it. It is a much better idea to start with a part that doesn’t require so much work: it’s easier to fit such a task into a sprint, and it's more likely that the test will actually work in the end. It's also a good idea to discuss the part of the system you would like to test with someone else, for example by asking your QA about the most important test scenarios they run every week or every release. Look for a test that is fairly easy to implement and, at the same time, covers an important test scenario. In our project, we have a weekly test scenario called “Sanity Check”. It’s a set of 13 tests that verify whether the most important features of the app work. Our software development process says that the app can be released only when 100% of this test set passes. This is our main regression prevention mechanism. Some of these tests are pretty straightforward, like verifying account creation. Others are much more complex, like verifying the whole check-in flow for a room reservation. You should definitely start with the account creation test and then plan the implementation of the others in future sprints.

Proof

Test Coverage

This is probably the first thing you, like most of the software development community, will consider when it comes to proving the value of tests. Keep in mind that this is only a number, which can easily be either calculated wrongly or misunderstood. Imagine you present a coverage report like this to your customer:

SomeFancyApp iOS app UI Tests results for branch 'master':

https://coverage-report-for-particular-methods-and-functions.html

SomeFancy.app: 65.69%

Besides the percentage of code covered by tests, the report also shows the coverage of individual functions and methods. Deep down, we all feel that our customer wouldn’t understand what each method really does, yet we still deceive ourselves that showing its test coverage adds some value. Let's think about other ways to prove the importance of our automated tests.

More human-readable reports

We realized we needed something different, something a non-technical person can read and understand. We wanted everyone to understand each test scenario exactly: its steps, its result, and how long it takes to execute.

This "humanization" of test reports was incredibly difficult and time-consuming, but after a few iterations we came up with reports that our customer understands and, most importantly, that show the business value of the tests.

We ended up implementing a whole system for collecting reports, which are submitted during every Continuous Integration run.

This system is used across many projects in our company, but there are also widely available tools, like TestRail or Xray, which are even more powerful; you can buy licenses for them instead of building your own solution.

We decided that our ideal app build description should contain:

- Release notes grouped by fixes and features (using conventional commits)

- Sanity check results for all 13 test scenarios

- List of all UI tests, each including a description, a list of all its steps, and all its assertions. Every test is marked either as a success or as a well-described failure

- Snapshots of every screen of the app (which designers can review manually)

- Coverage report (we decided to include it, although it’s useful only to developers)
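The first item relies on Conventional Commits, where each commit subject starts with a type such as `feat` or `fix`. Below is a minimal sketch, not the tooling we actually use, assuming the commit subjects since the last release have already been collected (for example with `git log --format=%s <previous-tag>..HEAD`). The function name `release_notes` and the section titles are hypothetical.

```python
import re

# Conventional Commits header: type, optional (scope), optional "!", then ": description".
HEADER = re.compile(r"^(?P<type>\w+)(?:\((?P<scope>[^)]*)\))?(?P<breaking>!)?: (?P<desc>.+)$")

# Only these commit types end up in the release notes (hypothetical choice).
SECTIONS = {"feat": "Features", "fix": "Fixes"}


def release_notes(commit_subjects: list[str]) -> str:
    """Group commit subjects into Markdown sections, skipping other commit types."""
    grouped: dict[str, list[str]] = {title: [] for title in SECTIONS.values()}
    for subject in commit_subjects:
        match = HEADER.match(subject.strip())
        if match is None or match["type"] not in SECTIONS:
            continue  # not a feat/fix commit, or not a Conventional Commit at all
        scope = f"**{match['scope']}**: " if match["scope"] else ""
        grouped[SECTIONS[match["type"]]].append(f"- {scope}{match['desc']}")

    lines: list[str] = []
    for title, items in grouped.items():
        if items:  # omit empty sections entirely
            lines.append(f"## {title}")
            lines.extend(items)
            lines.append("")
    return "\n".join(lines).rstrip("\n") + "\n" if lines else ""
```

Commits such as `chore: bump dependencies` or plain merge commits are silently dropped, which keeps the notes focused on what testers and the customer care about.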

How does it work?

Basically, we collect Markdown fragments from several parallel Continuous Integration steps. Once the whole CI workflow has finished, the combined Markdown is published as a Confluence page. A link to the newly created page is then posted to a Slack channel, informing developers, testers, and other team members that a new build is available.

We collect Markdown fragments from the following jobs in our CI workflow:

- unit tests

- end-to-end tests (UI tests on real services)

- integration tests (UI tests on mocked services)

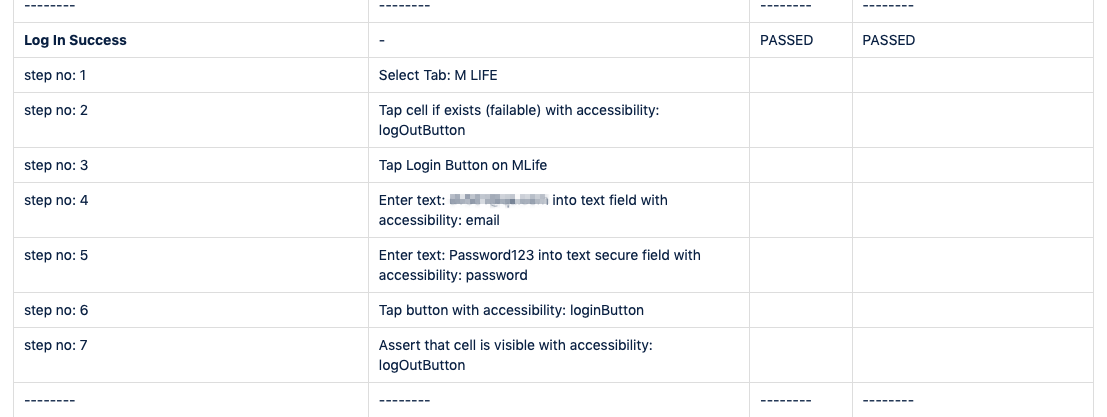
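The merging step can be sketched as follows, assuming (hypothetically) that each parallel CI job leaves one Markdown file, such as `unit-tests.md`, in a shared artifacts directory. The file names, section titles, and the functions `load_fragments` and `combine_fragments` are illustrative; publishing the result to Confluence and posting the link to Slack are left out because they depend on your own tooling.

```python
from pathlib import Path

# Hypothetical fragment file names, one per parallel CI job, in the order they should appear.
SECTION_ORDER = [
    ("Unit tests", "unit-tests.md"),
    ("End-to-end tests (real services)", "end-to-end-tests.md"),
    ("Integration tests (mocked services)", "integration-tests.md"),
]


def load_fragments(fragment_dir: Path) -> dict[str, str]:
    """Read every *.md fragment in the directory, keyed by file name."""
    return {p.name: p.read_text(encoding="utf-8") for p in sorted(fragment_dir.glob("*.md"))}


def combine_fragments(fragments: dict[str, str], build_name: str) -> str:
    """Merge per-job fragments into one build description.

    Sections always appear in the same order, regardless of which parallel job
    finished first, and a missing fragment is reported instead of silently vanishing.
    """
    parts = [f"# {build_name}", ""]
    for title, file_name in SECTION_ORDER:
        parts.append(f"## {title}")
        body = fragments.get(file_name)
        parts.append(body.strip() if body else "_No report was produced by this job._")
        parts.append("")
    return "\n".join(parts)
```

A final CI step would call `combine_fragments(load_fragments(Path("reports")), "SomeFancyApp build 42")` and hand the resulting Markdown to whatever publishes it.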

In the end, what made the customer happiest was being able to see the exact steps of each test scenario, which in practice look like the picture below:

Even if you are not a software developer, you can probably understand this without asking an engineer, even though the steps are generated automatically and do not sound entirely natural. The 3rd and 4th columns show the result of running the same scenario in a mocked environment (integration test) and in a real one (end-to-end test).
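A table like this can be generated from structured test results. The sketch below is hypothetical: it assumes each step records one result for the mocked run and one for the real run, and that the first two columns are simply the step number and its generated description. `Step`, `Result`, and `scenario_table` are illustrative names, not part of any real library.

```python
from dataclasses import dataclass
from enum import Enum


class Result(Enum):
    PASSED = "passed"
    FAILED = "failed"
    NOT_RUN = "not run"


@dataclass
class Step:
    description: str  # auto-generated step text, e.g. "Tap 'Create account'"
    mocked: Result    # integration test: UI test against mocked services
    real: Result      # end-to-end test: UI test against real services


def scenario_table(name: str, steps: list[Step]) -> str:
    """Render one test scenario as a Markdown table readable by non-developers."""
    lines = [
        f"### {name}",
        "",
        "| # | Step | Mocked services (integration) | Real services (end-to-end) |",
        "|---|------|-------------------------------|----------------------------|",
    ]
    for number, step in enumerate(steps, start=1):
        # Escape pipes so step text cannot break the table layout.
        text = step.description.replace("|", "\\|")
        lines.append(f"| {number} | {text} | {step.mocked.value} | {step.real.value} |")
    return "\n".join(lines) + "\n"
```

Keeping both results on the same row is what lets a reader spot at a glance whether a failure comes from the app itself (fails in both columns) or from an external service (fails only against real services).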

Time savings

Before automating it, we had to spend 4-5 hours performing the “Sanity Check” manually for every release candidate. We had to do the same for both the Android and iOS applications, and we typically release around 7 builds per month for each platform. Multiplying these values together gives 56 to 70 hours spent on sanity checks every month.

As the title says, we measured that we spent around 500 hours implementing these tests. No customer would let us spend that much time without a serious reason. As you can quickly calculate, dividing 500 hours by the 56 to 70 hours saved per month means the investment starts paying off after roughly 7 to 9 months. But of course, it's not only about time savings and app stability.
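The same arithmetic as a small sketch. It optimistically assumes that the automated tests cost nothing to maintain and that CI machine time is free, so in practice the break-even point comes somewhat later than this estimate. The function names are illustrative.

```python
def monthly_manual_hours(hours_per_run: float, platforms: int, builds_per_month: int) -> float:
    """Hours per month spent running the Sanity Check by hand."""
    return hours_per_run * platforms * builds_per_month


def break_even_months(investment_hours: float, saved_hours_per_month: float) -> float:
    """Months until automation has saved as many hours as it cost to build."""
    return investment_hours / saved_hours_per_month


for hours_per_run in (4, 5):
    saved = monthly_manual_hours(hours_per_run, platforms=2, builds_per_month=7)
    print(f"{hours_per_run} h per run: {saved:.0f} h/month, "
          f"break-even after {break_even_months(500, saved):.1f} months")
# 4 h per run: 56 h/month, break-even after 8.9 months
# 5 h per run: 70 h/month, break-even after 7.1 months
```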

We’ve learned a lot

Implementing such tests certainly brings value to the customer and saves them money, but it also brings significant value to developers. We gained much better knowledge of the systems we use, which also helps with application implementation details. Thanks to this knowledge, we can handle more edge cases, which makes more users happy with the app we develop and, in the end, makes the customer happy too. Besides writing the mobile-platform tests themselves, we were also forced to integrate with dozens of external systems to provide test data. This part is project-specific: the application is mostly about room reservations, which are valid only for a few days, so every test run needs a freshly prepared, valid reservation. Doing this is very, very complicated because many systems must be synchronized with each other.

And here we come to the most interesting part for customers: allowing your programmers to develop their skills by experimenting with your product (in our case, a mobile app) actually makes the product better. This is an important conclusion that we wouldn't have reached without huge determination and constantly encouraging the customer to actually pay for writing more tests. Keep in mind, though, that we started with very small steps: a very simple test for creating an account, which does not require integration with any external systems but covers a key feature of the product.

Conclusion

The more you think about this topic, the more you realize that a customer may simply not know that tests are important for making the final product great, and that writing them takes time. The simplest solution to this problem is to prove their value and show that they are a huge boost for all developers and the QA team, as we did in the story above.

But wait: can I apply this "tests" idea to other software development topics? Of course! That's what is great about this story: you can do exactly the same for changing the architecture, refactoring, building environments, paying off technical debt, and so on. These are all the same problem: the customer just doesn't see any value in the work, and you need to open their eyes to it.

The value you deliver is always measured against what your customer believes the value is.

I hope I've encouraged you to take a step back and think about how to become a real hero for your customer while constantly developing yourself.

Finally, I would like to thank my whole team for their hard work, especially Jacek Marchwicki and Piotr Mądry for their great ideas and for creating much of the tooling behind this, which communicates with external services and generates Confluence pages from the reports. The tools these guys created are really inspiring, and I hope they will inspire you too!