If someone googles how to increase a topic partition count of a Kafka topic running on a Confluent platform, they will find dozens of links. Some sources will say that it is impossible and that a migration to a new topic is required. Others will mention a kafka-cli toolset without explaining how to configure and run it locally. Some describe the process, but it can be quite complicated - involving 15 steps and requiring several tools (java, kafka broker) to be installed on your local machine.

However, it can be simpler than that.

Kafkactl

Here's a tool called kafkactl, that you might find helpful. It's a simple CLI tool that allows you to interact with a Kafka cluster. Unlike the Confluent CLI, it enables you to increase the partition count of an existing topic. Additionally, it's much easier to install and configure than the Kafka CLI.

You don't even have to install it. You can simply run it through Docker by using the following command:

docker run deviceinsight/kafkactl get topics

However, before you do so, there’s one more thing you have to do - configure Kafkactl, so that it knows how to connect to the Kafka cluster.

Connecting Kafkactl to a Kafka cluster on Confluent Cloud

To configure Kafkactl, first you need to grab credentials to your Kafka cluster.

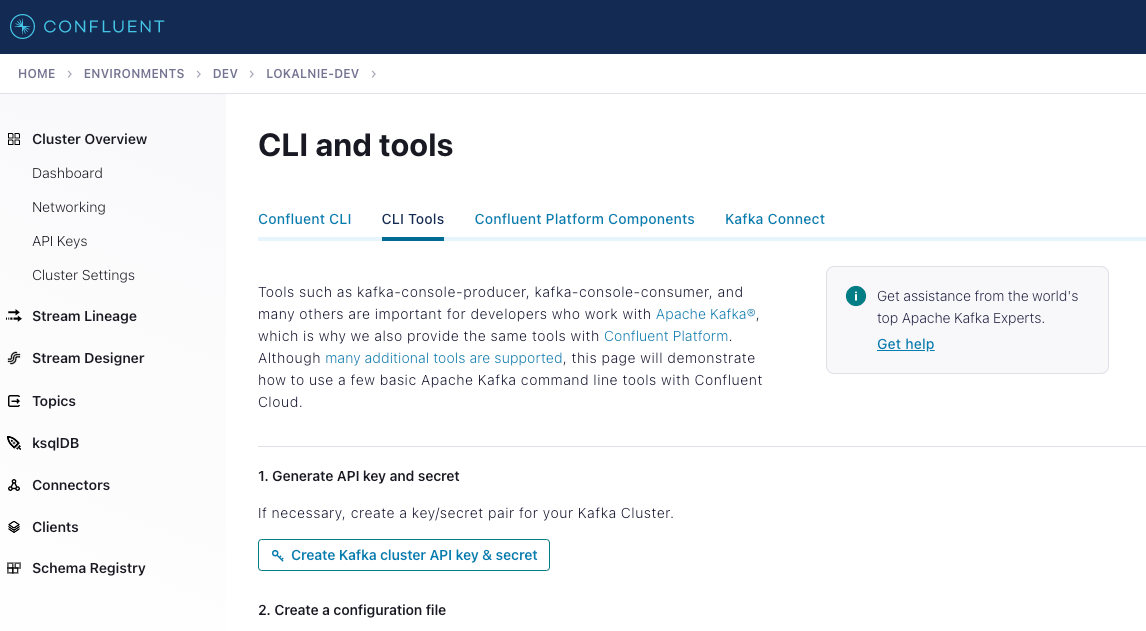

If you login into Confluent Cloud console, you can find a "CLI and Tools" page there that describes how to configure various CLI tools so that they can connect to the Kafka cluster running on Confluent Cloud.

Unfortunately, it doesn’t describe how kafkactl can be configured. Figuring it out by myself wasn’t super hard, but I’d say hard enough to write an article about this. ;)

In short, to configure Kafkactl so that it connects to a Kafka cluster on Confluent Cloud:

- In Confluent Cloud console, go to Home → Environments → your environment → CLI and tools.

- In “CLI Tools”, generate API key and secret (username and password).

- Create a

kafkactl.ymlconfig on your machine like this:

contexts:

default:

brokers:

- BROKER_URL # "bootstrap.servers" from the "Create a configuration file" section

tls:

enabled: true

sasl:

enabled: true

username: API_KEY

password: API_SECRET

current-context: default

Then, to run kafkactl using docker with the given configuration file, run the following command:

docker run -v (pwd)/kafkactl.yml:/etc/kafkactl/config.yml deviceinsight/kafkactl get topics

Increasing the topic partitions count

Finally, to increase the partition count of an existing topic using kafkactl, you can run this:

docker run -v (pwd)/kafkactl.yml:/etc/kafkactl/config.yml deviceinsight/kafkactl alter topic TOPIC_NAME -p PARTITION_COUNT

# example

docker run -v (pwd)/kafkactl.yml:/etc/kafkactl/config.yml deviceinsight/kafkactl alter topic payments -p 10

Before running this operation, it is important to be aware of the pitfalls of increasing the topic partition count. In summary:

- The partition count can only be increased, not decreased. This means that you cannot revert to the old number of partitions. Therefore, it is important to make a well-informed decision on the desired partition count for your topic. A helpful resource for determining this is this article.

- The order of events may no longer be guaranteed, as producers before and after the "increase partition count" operation may save events to separate partitions.

If the second limitation is not feasible for you, try something else: migrating to a new topic instead of increasing the partition count of an existing topic. However, this may be an organizational burden, so it may be easier to avoid it by reworking your Kafka consumer code to handle events that arrive in the wrong order.

Running kafkactl for other means

Of course, feel free to use kafkactl for other means. To make the above command easier, we’ve set up a shell script to simplify calling it:

#!/bin/sh

set -xefuo pipefail

KAFKA_ENDPOINTS=${KAFKA_ENDPOINTS:?"KAFKA_ENDPOINTS env var missing"}

KAFKA_CLIENT_ID=${KAFKA_CLIENT_ID:?"KAFKA_CLIENT_ID env var missing"}

KAFKA_SECRET=${KAFKA_SECRET:?"KAFKA_SECRET env var missing"}

docker run \

-e "BROKERS=$KAFKA_ENDPOINTS" \

-e "TLS_ENABLED=true" \

-e "SASL_ENABLED=true" \

-e "SASL_USERNAME=$KAFKA_CLIENT_ID" \

-e "SASL_PASSWORD=$KAFKA_SECRET" \

deviceinsight/kafkactl $@

You can save this script for example at bin/kafkactl path in your project, and then run it like this:

bin/kafkactl get topic

Easy, right? 🙂 Just make sure you set up KAFKA_ENDPOINTS KAFKA_CLIENT_ID KAFKA_SECRET beforehand in your shell.

Need help? We can assist.

If you need assistance installing and configuring a Kafka cluster, or running an application that handles millions of events, feel free to contact us at AppUnite. Our team has experience running such a backend for the largest e-commerce in Poland - Allegro. We can help you optimize your Kafka setup and ensure that your event streaming architecture is performant and scalable.